Microsoft is putting a stop to its sketchy AI emotion recognition

Emotion recognition is gross, and people's emotions shouldn't be something that gets monitored.

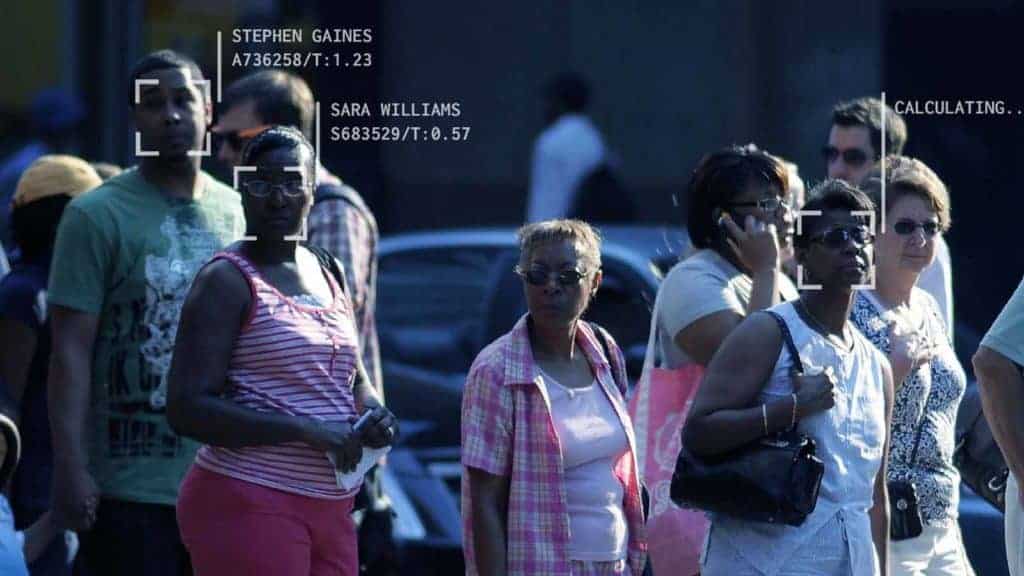

Facial recognition is a hot-button topic, and Microsoft is now changing course on its AI projects by retiring its emotion recognition technology.

Microsoft is currently working on improving its ethics policies. Part of that effort is a new version of its Responsible AI Standard, which the company describes as a multi-year project to "define product development requirements for responsible AI."

As part of this, the company is halting, for the time being, the emotion recognition features it has offered to Azure customers.

So, why is Microsoft halting the project? One of the main reasons is that no one really knows how to define emotion accurately, according to Natasha Crampton, Microsoft's Chief Responsible AI Officer (h/t Gizmodo).

Without a clear definition, these AI systems can't reliably infer which emotions people are actually feeling. Beyond that, emotion recognition is just gross and shouldn't be something businesses care about.

Some companies have already tried to force workers to put a smile on their faces before meetings. Microsoft's decision to pull emotion recognition from its Azure Face facial recognition service is a smart one.

Facial recognition is full of problems. These systems are often trained on flawed or unrepresentative datasets, and their use can lead to discrimination, misinformation, and privacy violations.

Have any thoughts on this? Let us know down below in the comments or carry the discussion over to our Twitter or Facebook.

Editors’ Recommendations:

- Microsoft stops serving Windows downloads to Russian users

- This search engine is basically Google but for facial recognition

- It’s 2022 and Google Drive finally supports Copy and Paste

- Microsoft accidentally let anyone upgrade to Windows 11, but the bug has been fixed