Facebook only took a decade to decide it should warn users about extremist content

In case you were wondering if the site cares about you

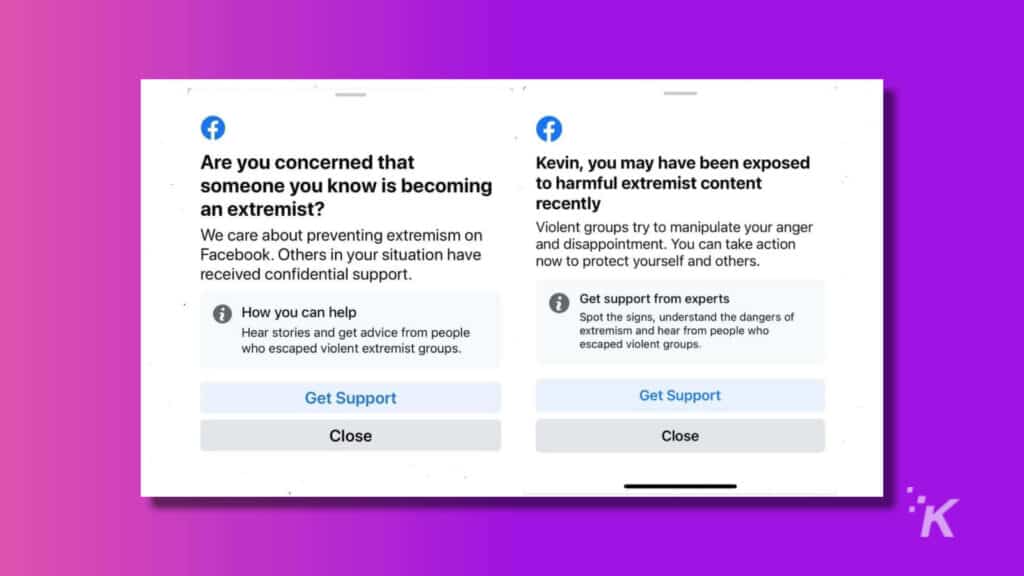

Facebook has taken to victim-blaming, with new prompts to get help aimed at those who might have been exposed to “violent extremist content” on the platform. So yes, instead of actually dealing with the slew of hateful content on its own service, Facebook is putting a band-aid over the affected users to assuage its own guilt.

Well, I assume it comes from a place of guilt. But maybe I’m being too generous, and it’s really coming from a place of “please don’t investigate us again, Mr. Government.”

I mean, we shouldn’t attribute any “best intentions” to a site that “actively promotes” Holocaust deniers, wants to pipe advertising onto your eyeballs, wants to put not one but two cameras on your wrist, allowed politicians to spew hateful lies, tracks you everywhere even when you tell it not to, won’t leave your kids alone (or stop other people from abusing your kids), let hackers scrape your phone number (at least twice), and has eroded western democracy through inaction.

The prompts about being exposed to radical extremist views don’t just show up for the affected Facebook user. Oh no, they show up for their friends and family too, except this time they say, “Are you concerned that someone you know is becoming an extremist?” Isn’t that the same as telling people to turn in their family to the feds? Maybe work on removing that extremist content faster, Zuckerberg, instead of putting the blame on users and their closest friends and family.

In a statement to The Verge, a Facebook spokesperson said that the test is part of the company’s “larger work to assess ways to provide resources and support to people on Facebook who may have engaged with or were exposed to extremist content, or may know someone who is at risk.”

The real problem here is that, while social media sites do have policies against extremist content, they default to governmental designations when actually enforcing those rules.

That’s a problem for many reasons, not the least of which is that social media sites are effectively doing the government’s political bidding instead of policing all hateful content, regardless of the source. Another is that the US has no list of domestic terror organizations, which makes Facebook’s efforts to root out extremist content harder under its current operating methods.

The solution? Perhaps Facebook and other social media sites with worldwide reach could all agree on a common definition of what extremist content is, regardless of color, creed or country, and ban anything that meets it. That could also give governments worldwide a common definition to work from, letting practice inform policy.

